In the rapidly evolving landscape of artificial intelligence, large language models (LLMs) have emerged as a pivotal development. These advanced computational systems harness the power of machine learning to understand and generate human-like text, pushing the boundaries of AI’s capabilities. From powering chatbots to enhancing predictive text features, LLMs influence various aspects of technology and society, making their study and understanding crucial for any tech enthusiast.

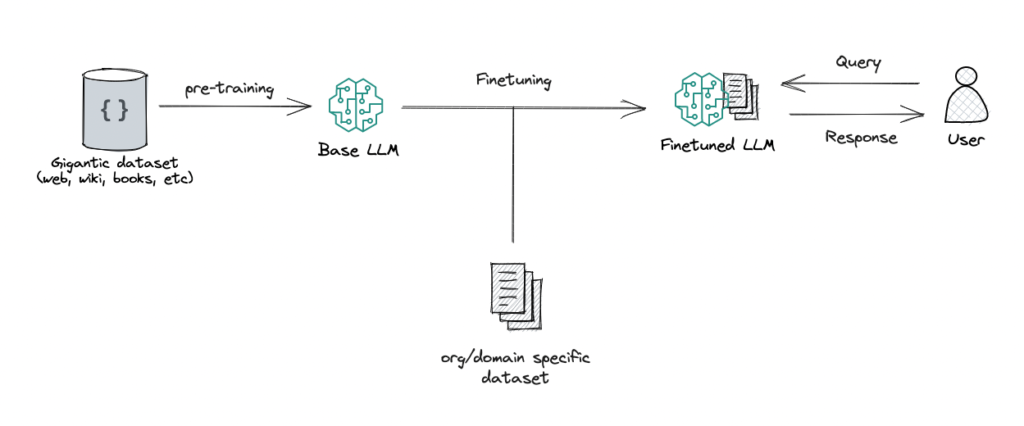

Large language models (LLMs) are advanced AI systems designed to process, understand, and generate human language in a way that resembles how people use it. At their core, these models combine vast amounts of training data with neural networks, most notably the Transformer architecture, to deliver strong language processing capabilities. They are not limited to understanding or generating text; they can engage in context-aware conversations, translate between languages with high accuracy, and create content that ranges from routine reports to creative literature and poetry.

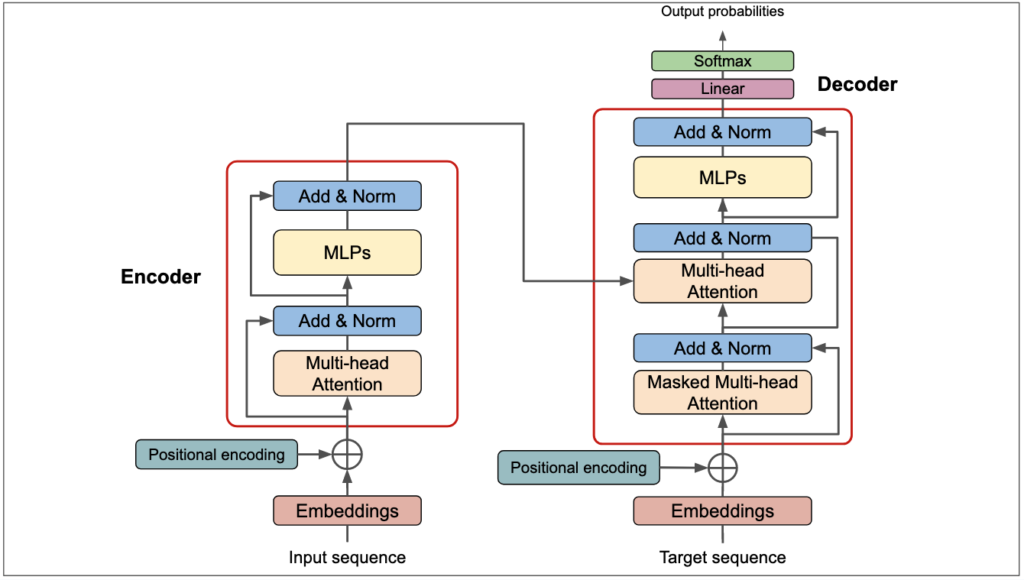

One of the most significant advancements in the development of LLMs is the Transformer architecture, first introduced in the paper “Attention Is All You Need” by Vaswani et al. in 2017. This architecture is distinguished by its use of self-attention, a mechanism that lets the model weigh how relevant every word in a sentence is to every other word, regardless of how far apart they are in the text.
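To make the idea of self-attention concrete, here is a minimal sketch in plain Python. It is not the implementation from the paper: it assumes the queries, keys, and values are the token embeddings themselves (a real Transformer learns separate projection matrices for each), and the function names `softmax` and `self_attention` and the toy embeddings are hypothetical, chosen only for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings):
    """Scaled dot-product self-attention over a list of token vectors.

    Simplifying assumption: queries, keys, and values are all the raw
    embeddings. A trained model would first apply learned projections.
    """
    dim = len(embeddings[0])
    all_weights = []
    outputs = []
    for query in embeddings:
        # Score this token against every token, near or far.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is a weighted average of all token vectors.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, embeddings))
            for i in range(dim)
        ])
    return outputs, all_weights


# Toy 2-dimensional embeddings: the first and last tokens are identical.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
```

In this toy input, the first token gives the last token exactly the same weight it gives itself, even though they sit at opposite ends of the sequence. The score depends on how similar two vectors are, not on how far apart the words are.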

Google has been at the forefront of applying and enhancing this architecture, using it in its BERT model, which stands for Bidirectional Encoder Representations from Transformers. BERT represents a major leap forward because it processes each word in relation to all the other words in a sentence, rather than one by one in order, leading to a much deeper understanding of context.
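The difference between reading a sentence in order and reading it bidirectionally can be pictured as a visibility mask: a grid that records which words each word is allowed to look at. The sketch below is only an illustration of that idea, not BERT's actual code, and the function name `visibility_mask` is hypothetical.

```python
def visibility_mask(n_tokens, bidirectional):
    """Return a grid where mask[i][j] is True if token i may look at token j.

    bidirectional=True  -> every token sees every other token.
    bidirectional=False -> a token sees only itself and earlier tokens,
                           as in strict left-to-right processing.
    """
    return [
        [bidirectional or j <= i for j in range(n_tokens)]
        for i in range(n_tokens)
    ]


sentence = ["the", "bank", "of", "the", "river"]
in_order = visibility_mask(len(sentence), bidirectional=False)
both_ways = visibility_mask(len(sentence), bidirectional=True)

# Position 1 is "bank"; position 4 is "river".
bank_sees_river_in_order = in_order[1][4]
bank_sees_river_both_ways = both_ways[1][4]
```

With the left-to-right mask, "bank" cannot see "river", so the model has to guess its meaning without that clue. With the bidirectional mask it can, which is the kind of extra context the paragraph above describes.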

LLMs can now perform many tasks once thought exclusive to human intelligence. Because they generate text based on the context they are given and the data they were trained on, they are changing how businesses and creative fields operate. Here’s a look at the diverse array of tasks that LLMs can handle:

- Writing and Editing Articles: LLMs assist in the creation and refinement of content across various topics, improving both the speed and quality of text production.

- Composing Poetry: Leveraging their understanding of language patterns and structures, LLMs are capable of crafting creative and intricate poetry, mimicking various styles and forms.

- Generating Computer Code: These models aid programmers by generating, debugging, and optimizing code, which can accelerate development processes and reduce error rates.

- Creating Music: From composing new pieces to arranging existing melodies, LLMs apply their pattern recognition skills to create music that spans genres and styles.

- Enhancing Customer Service: By powering chatbots and virtual assistants, LLMs handle customer queries with increasing precision, providing real-time, personalized responses that improve customer engagement.

- Improving Search Engine Results: LLMs enhance search engines by better understanding the context of queries, leading to more accurate and relevant search outcomes.

- Automating Routine Documentation: Particularly in legal and medical fields, LLMs streamline documentation processes, efficiently generating and summarizing essential documents.

- Versatility in Business Operations: The adaptability of LLMs enables their application across various industries, revolutionizing interactions, enhancing productivity, and optimizing operational efficiencies.

Despite their impressive capabilities, large language models come with their own set of challenges. The primary concern is bias: since these models learn from existing data, they can inadvertently perpetuate the biases present in that data. Moreover, the environmental impact of training and running such large models is significant, given the extensive computational resources required. There are also ethical concerns about the use of these technologies to create misleading information or deepfakes, which can be difficult to distinguish from genuine content.